Performance of the System using Multithreading Processing

Multithreading:

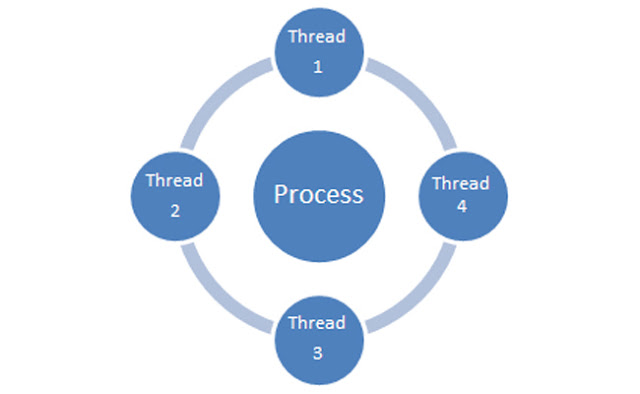

All modern operating systems can run multiple programs at the same time. Normally those programs are using some area of the memory, which is allocated to them by the operating system. That way they won't be able to interfere with one another. However, this type of isolation results in certain amount of overhead in terms of processing time. As an alternative we use multithreading concept which is a light weight process that improves the utilization of CPU by implementing group of related threads that belongs to the same process that share code section, data section and other OS resources i.e.Files.

Each thread in the program has

1) Thread ID

2) Program Counter

3) Register Set

4) Stack

Let's assume we have a process that has its own code, data and file sections and we break this process task into sub units known as threads i.e. T1, T2 and T3. As a result, each thread contains it's own register stack value and code, on the other hand data and file sections are shared to all the threads.

Amdahl's Law:

Amdahl's law states that, in parallel computing, if P is the proportion of the program that can be made parallel, and 1-P is the proportion of the program that will remain serial, then maximum number of speedup that can be achieved using N number of processors is 1/((1-P)+(P/N)).

In other words, Amdahl's Law provides a formula which is used to find the peak level of improvement possible by just improving a particular part of the system. It is often used in parallel computing to predict the theoretical speedup when using multiple processors.

We can achieve parallelism in the system using multi-threading concept. As a result, most modern applications are multithreaded and multiple tasks in the application can be implemented by separate threads i.e.

- Update display

- Fetch Data

- Spell Checking

- Answer a Network Request

Advantages of Multithreading :

1) It provides responsiveness to the program execution that means it allow continued execution if part of the process is blocked. It is important to User Interfaces.

2) It allows Resource Sharing of the process which is easier than shared memory or message passing.

3) Process can take an advantage of multiprocessor architecture.

4) Process creation is heavy weighted while thread creation is light weighted.

5) Threads are cheap in the sense that :

- They only need stack and storage for registers therefore, threads are cheap to create in terms of space occupation in the operating system.

- Threads use very few resources of an operating system in which they are working. That is, threads don't need new address space, global data, program code or operating system resources.

- Context switching are faster in multithreading because it only needs to save and/or restore PC, SP and registers, which eventually enhances the performance of the system.

But this advantages does not come free-the biggest drawback is there is no protection between threads.

- Security: Since there is an extensive sharing between the threads,there is a potential problem of security. It is quite possible that one thread overwrites the stack of another thread(or damaged shared data). Hence ,synchronization is needed between the threads.

- Blocking: The major disadvantage is if the kernel is single threaded, a system call of one thread will block the whole process of the system and CPU may be idle during the blocking period.

Below are the programs that depicts how multi threading can affect a system's performance if we over limit the number of threads.

1) 1thread.c

/*On Simics compile with "gcc thread.c -pthread"

on CygWin, -pthread option is not required */

#include <pthread.h>

#include <stdio.h>

//cache blocks are 32B, make sure array and sum elements fall on different blocks

#define NUMBER_OF_ROWS 5120

#define NUMBER_OF_COLS 100000

//shared arrays named "array" and "sum", variable "count", and lock location named //"lock_var"

int array[NUMBER_OF_ROWS][NUMBER_OF_COLS];

float sum[NUMBER_OF_ROWS];

void *FindRowSum(void *arg)

{

int *pid = (int *) arg;

int index, j;

for(index = 0; index < NUMBER_OF_ROWS; index++)

{

for (j=0; j< NUMBER_OF_COLS; j++) //assume array data are read here

array[index][j] = index;

sum[index] = 0; //start computations (assume a complex one)

for (j=0; j<NUMBER_OF_COLS; j++)

{

sum[index] = sum[index] + array[index][j];

}

printf("pid = %d Sum[%d] = %f\n", *pid, index, sum[index]);

}

return NULL;

}

int main (void)

{

int i, pid;

printf("1T: PID 0 started: time = %f\n", (double) time(NULL));

pid = 0;

FindRowSum(&pid);

printf("1T: PID 0 ended: time = %f\n", (double) time(NULL));

return 0;

}

2) 2threads.c

#include <pthread.h>

#include <stdio.h>

#define NUMBER_OF_ROWS 5120

#define NUMBER_OF_COLS 100000

#define NUMBER_OF_ADDITIONAL_THREADS 1

//shared arrays named "array" and "sum", variable "count", and lock location named "lock_var"

int array[NUMBER_OF_ROWS][NUMBER_OF_COLS];

float sum[NUMBER_OF_ROWS];

int count = 0; //define a shared count, initialized to zero

pthread_mutex_t lock_var; //define a shared lock variable (a system level variable)

int FetchAndIncrement()

{

int t;

pthread_mutex_lock(&lock_var); //lock

t = count;

count = count + 1;

pthread_mutex_unlock(&lock_var); //unlock

return(t);

}

void *FindRowSum(void *arg)

{

int *pid = (int *) arg;

int index, j;

index = FetchAndIncrement();

while(index < NUMBER_OF_ROWS)

{

for (j=0; j< NUMBER_OF_COLS; j++)

array[index][j] = index; //assume array data are read here

sum[index] = 0; //start computations (assume a complex one)

for (j=0; j<NUMBER_OF_COLS; j++)

{

sum[index] = sum[index] + array[index][j];

}

printf("pid = %d Sum[%d] = %f\n", *pid, index, sum[index]);

index = FetchAndIncrement();

}

return NULL;

}

int main (void)

{

pthread_t threadID[NUMBER_OF_ADDITIONAL_THREADS];

void *exit_status;

int i, pid[2];

printf("started: time = %f\n", (double) time(NULL));

for(i=0; i<NUMBER_OF_ADDITIONAL_THREADS; i++)

{

//create 1 aditional threads to run

pid[i] = i;

pthread_create(&threadID[i], NULL, FindRowSum, &pid[i]);

//&i would also work but print pid info above will be incorrect

}

pid[1] = 1;

FindRowSum(&pid[1]);

for(i=0; i<NUMBER_OF_ADDITIONAL_THREADS; i++)

pthread_join(threadID[i], &exit_status);

printf("Ended: time = %f\n", (double) time(NULL));

return 0;

}

#include <pthread.h>

#include <stdio.h>

//cache blocks are 32B, make sure array and sum elements fall on different blocks

#define NUMBER_OF_ROWS 5120

#define NUMBER_OF_COLS 100000

//shared arrays named "array" and "sum", variable "count", and lock location named //"lock_var"

int array[NUMBER_OF_ROWS][NUMBER_OF_COLS];

float sum[NUMBER_OF_ROWS];

void *FindRowSum(void *arg)

{

int *pid = (int *) arg;

int index, j;

for(index = 0; index < NUMBER_OF_ROWS; index++)

{

for (j=0; j< NUMBER_OF_COLS; j++) //assume array data are read here

array[index][j] = index;

sum[index] = 0; //start computations (assume a complex one)

for (j=0; j<NUMBER_OF_COLS; j++)

{

sum[index] = sum[index] + array[index][j];

}

printf("pid = %d Sum[%d] = %f\n", *pid, index, sum[index]);

}

return NULL;

}

int main (void)

{

int i, pid;

printf("1T: PID 0 started: time = %f\n", (double) time(NULL));

pid = 0;

FindRowSum(&pid);

printf("1T: PID 0 ended: time = %f\n", (double) time(NULL));

return 0;

}

2) 2threads.c

#include <pthread.h>

#include <stdio.h>

#define NUMBER_OF_ROWS 5120

#define NUMBER_OF_COLS 100000

#define NUMBER_OF_ADDITIONAL_THREADS 1

//shared arrays named "array" and "sum", variable "count", and lock location named "lock_var"

int array[NUMBER_OF_ROWS][NUMBER_OF_COLS];

float sum[NUMBER_OF_ROWS];

int count = 0; //define a shared count, initialized to zero

pthread_mutex_t lock_var; //define a shared lock variable (a system level variable)

int FetchAndIncrement()

{

int t;

pthread_mutex_lock(&lock_var); //lock

t = count;

count = count + 1;

pthread_mutex_unlock(&lock_var); //unlock

return(t);

}

void *FindRowSum(void *arg)

{

int *pid = (int *) arg;

int index, j;

index = FetchAndIncrement();

while(index < NUMBER_OF_ROWS)

{

for (j=0; j< NUMBER_OF_COLS; j++)

array[index][j] = index; //assume array data are read here

sum[index] = 0; //start computations (assume a complex one)

for (j=0; j<NUMBER_OF_COLS; j++)

{

sum[index] = sum[index] + array[index][j];

}

printf("pid = %d Sum[%d] = %f\n", *pid, index, sum[index]);

index = FetchAndIncrement();

}

return NULL;

}

int main (void)

{

pthread_t threadID[NUMBER_OF_ADDITIONAL_THREADS];

void *exit_status;

int i, pid[2];

printf("started: time = %f\n", (double) time(NULL));

for(i=0; i<NUMBER_OF_ADDITIONAL_THREADS; i++)

{

//create 1 aditional threads to run

pid[i] = i;

pthread_create(&threadID[i], NULL, FindRowSum, &pid[i]);

//&i would also work but print pid info above will be incorrect

}

pid[1] = 1;

FindRowSum(&pid[1]);

for(i=0; i<NUMBER_OF_ADDITIONAL_THREADS; i++)

pthread_join(threadID[i], &exit_status);

printf("Ended: time = %f\n", (double) time(NULL));

return 0;

}

3) 4threads.c

#include <pthread.h>

#include <stdio.h>

#define NUMBER_OF_ROWS 5120

#define NUMBER_OF_COLS 100000

#define NUMBER_OF_ADDITIONAL_THREADS 3

//shared arrays named "array" and "sum", variable "count", and lock location named "lock_var"

int array[NUMBER_OF_ROWS][NUMBER_OF_COLS];

float sum[NUMBER_OF_ROWS];

int count = 0; //define a shared count, initialized to zero

pthread_mutex_t lock_var; //define a shared lock variable (a system level variable)

int FetchAndIncrement()

{

int t;

pthread_mutex_lock(&lock_var); //lock

t = count;

count = count + 1;

pthread_mutex_unlock(&lock_var); //unlock

return(t);

}

void *FindRowSum(void *arg)

{

int *pid = (int *) arg;

int index, j;

int ct1=0,ct2=0,ct3=0,ct4=0;

index = FetchAndIncrement();

while(index < NUMBER_OF_ROWS)

{

for (j=0; j< NUMBER_OF_COLS; j++)

array[index][j] = index; //assume array data are read here

sum[index] = 0; //start computations (assume a complex one)

for (j=0; j<NUMBER_OF_COLS; j++)

{

sum[index] = sum[index] + array[index][j];

}

if(*pid == 0)

{

ct1++;

}

else if(*pid == 1)

{

ct2++;

}

else if(*pid == 2)

{

ct3++;

}

else if(*pid == 3)

{

ct4++;

}

//printf("pid = %d Sum[%d] = %f\n", *pid, index, sum[index]);

index = FetchAndIncrement();

}

printf("\n count t1:=%d count t2:=%d count t3:=%d count t4:=%d\n",ct1,ct2,ct3,ct4);

return NULL;

}

int main (void)

{

pthread_t threadID[NUMBER_OF_ADDITIONAL_THREADS];

void *exit_status;

int i, pid[4];

printf("started: time = %f\n", (double) time(NULL));

for(i=0; i<NUMBER_OF_ADDITIONAL_THREADS; i++)

{

//create 3 aditional threads to run

pid[i] = i;

pthread_create(&threadID[i], NULL, FindRowSum, &pid[i]);

//&i would also work but print pid info above will be incorrect

}

pid[3] = 3;

FindRowSum(&pid[3]); //main is the 4th thread

for(i=0; i<NUMBER_OF_ADDITIONAL_THREADS; i++)

pthread_join(threadID[i], &exit_status);

printf("Ended: time = %f\n", (double) time(NULL));

return 0;

}

4) 10threads.c

#include <pthread.h>

#include <stdio.h>

#define NUMBER_OF_ROWS 5120

#define NUMBER_OF_COLS 100000

#define NUMBER_OF_ADDITIONAL_THREADS 9

int array[NUMBER_OF_ROWS][NUMBER_OF_COLS];

float sum[NUMBER_OF_ROWS];

int rowcount[10] = {0};

int count = 0; //define a shared count, initialized to zero

pthread_mutex_t lock_var;

int FetchAndIncrement()

{

int t;

pthread_mutex_lock(&lock_var); //lock

t = count;

count = count + 1;

pthread_mutex_unlock(&lock_var); //unlock

return(t);

}

void *FindRowSum(void *arg)

{

int *pid = (int *) arg;

int index, j,count;

index = FetchAndIncrement();

while(index < NUMBER_OF_ROWS)

{

for (j=0; j< NUMBER_OF_COLS; j++)

array[index][j] = index; //assume array data are read here

sum[index] = 0;

for (j=0; j<NUMBER_OF_COLS; j++)

{

sum[index] = sum[index] + array[index][j];

}

for(count=0;count<10;count++)

{

if(*pid == count)

{

rowcount[count]++;

}

}

index = FetchAndIncrement();

}

return NULL;

}

int main (void)

{

pthread_t threadID[NUMBER_OF_ADDITIONAL_THREADS];

void *exit_status;

int i, pid[10],tid;

printf("started: time = %f\n", (double) time(NULL));

for(i=0; i<NUMBER_OF_ADDITIONAL_THREADS; i++)

{

pid[i] = i;

pthread_create(&threadID[i], NULL, FindRowSum, &pid[i]);

}

pid[9] = 9;

FindRowSum(&pid[9]);

for(i=0; i<NUMBER_OF_ADDITIONAL_THREADS; i++)

pthread_join(threadID[i], &exit_status);

for(tid=0;tid<10;tid++)

{

printf("number of rows count of thread%d = %d\n",tid,rowcount[tid]);

}

printf("Ended: time = %f\n", (double) time(NULL));

return 0;

}

Observations:

- Program's execution time for different number of threads:

2. Time spent on each row by each program:

We can depict from the charts that 2 thread program is twice faster than 1 thread program and same scenario for 2 thread program vs 4 thread program. On the other hand, 10 thread program takes double time compare to 4 thread program because of the overhead in the program execution. Each thread takes small amount of time to communicate with other threads because multithreading shares the same address space hence there should be synchronization between each thread in order to get security in the program. Therefore, when threads are increased to 10 processing is increased compare to time taken by the 4 thread program which will result in decrease in the speedup and the performance.

Good one

ReplyDeleteThank you..!:)

DeleteKeep sharing really help full MR rutansh Trivedi ✌️👍

ReplyDeleteThank you..! Sure..!:)

DeleteWell explained....keep sharing

ReplyDeleteThank you...! Sure..!:)

Deletegood one

ReplyDeleteThank you brother..!:)

DeleteThis comment has been removed by the author.

ReplyDelete